1 Motivation & Key Idea

1.1 Background: Memory stalls in OoO processors

When a load instrcution misses the last level cache (LLC), the instruction often becomes the head of the reorder buffer (ROB), causing the processor to stall due to the full instruction window. This stall typically lasts for tens to hundreds of cycles, and becomes one of the main bottleneck for performance.

1.2 Motivation: Limitations of previous runahead

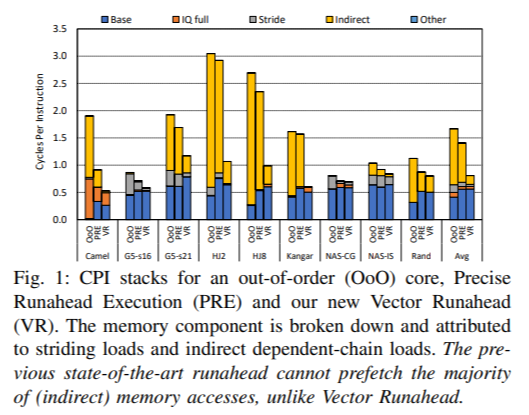

When a long-latency load instruction reaches the head of the instruction window and the window becomes full, the processor enters the runahead mode and speculatively generates future memory accesses. This has the effect of accurate prefetch, bringing data required by future load requests to the upper level cache, thereby contributing to performance improvement. Precise Runahead Execution (PRE) is the previous state-of-the-art runahead technique, which reduced the oveheads of transitioning between runahead and normal mode.

While PRE successfully prefetches a high fraction of future load requests, it fails to prefetch the majority of the indirect memory accesses. It is because the indirect memory access, dependent on the load request that caused the runahead mode, cannot be handled in runahead mode, consequently causing the processor to re-enter runahead mode soon, which stalls the processor again.

1.3 An illustrative example and key idea

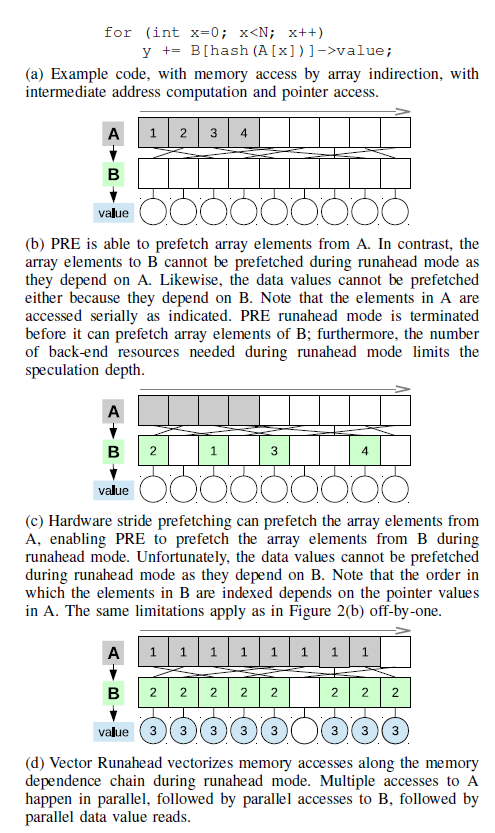

Code in Figure 2 (a) serves as an illustrative example for comparing PRE, PRE with HW prefetcher, and Vector Runahead.

As shown in Figure 2 (b), PRE can prefetch elements of array A in runahead mode, but accesses to array B and value can't be prefetched because these are dependent load instructions and would be invalidated in runahead mode. When returning to normal mode, the processor will soon stall agian due to the cache miss to array B.

PRE with HW stride prefetcher does better. As shown in Figure 2 (c), the HW stride prefetcher can capture and prefetch the simple stride access pattern, resulting in cache hits for accesses to array A in runahead mode, thereby enabling prefetching the elements of array B. However, value which is dependent on array B still cannot be prefetched. In summary, PRE with HW prefetcher can prefetch at most one more level of indirection in runahead mode. In addition to this limitation, the rate at which PRE can issue the load instruction in runahead mode is limited by the number of back-end resources. The processor might need to wait until there are avaliable back-end resources (e.g., instruction window) even if there are more future independent load instructions.

Vector Runahead continues runahead mode until all loads along the dependent load chain have been issued. In addition, it vectorizes the instruction stream for each dependent load chain. As shown in Figure 2 (d), accesses to array A, B, and the dependent data value that belong to the same vector are issued in parallel. After issuing all these load instructions, the processor returns to normal mode. Vectorization has two benefits: First, it increases the effective fecth/decode bandwidth. Second, it requires only one issue-queue slot for vectorized instructions, which were multiple scalar instructions in original code, thereby alleviating the constraints on back-end resource.

2 Implementation Details

Figure 3 shows the overall micro-architecture of OoO processor modified for Vector Runahead.

2.1 Entering vector-runahead mode

The stride detector maintains a table indexed by load PC, recording the observed stride, saturating counter, and PC of the final dependent load instruction for each entry.

The core enters runahead mode when the ROB or the issue queue is filled beyond the threshold. The current PC and the front-end RAT are checkpointed for recovery from runahead to normal mode. The processor accesses the stride detector for every load instruction, and if it captures stride pattern, the processor enters vector-runahead mode. It starts to vectorize the striding load instruction and the following instructions until another instance of a load with the same stride pattern is detected or the dependent load chain is complete.

2.2 Vectorizing instructions

The taint vector (TV) is a table that stores 2-bit entry for each architectural register. The Vectorize bit indicates whether the register depends on a vectorized operation. The Invalid bit indicates whether the register is invalid. (e.g., due to an unsupported operation or invalid source operands) If any source operand has either of these two bits set, the destination register also sets its corresponding bits. Instructions with the Vectorize bit set are vectorized, while other valid instructions are issued as conventional scalar operations.

The vectorizer generates a 512-bit vector load instruction from the current memory address and the captured stride. All following dependent arithmetic and load instructions are vectorized into their corresponding 512-bit versions. For a dependent load instruction, the terminator entry of the initial load instruction in the stride detector is updated. Instead of updating the state in the ROB, the register deallocation queue (RDQ), which is a new HW structure, is used for dellocating physical registers in runahead mode.

2.3 Vector unrolling and pipelining

With basic Vector Runahead, the processor runs in vector-runahead mode for only a limited period. The maximum number of executable instructions in vector-runahead mode is constrained by the vector width. (limited coverage during vector-runahead mode) Also, it can't issue enough load instructions simultaneously for the MSHR to be fully saturated. (limitied MLP) -> Figure 4 (a)

The Vector Runahead proposes vector unrolling. The processor continues in vector-runahead mode until it issues U, the unroll length, copies of the vectorized instruction sequence. It can issue the next vectorized sequence by incrementing the load address by the identified stride. -> Figure 4 (b)

Additionally, vector pipelining is introduced to increase the number of parallel memory accesses, thereby exploiting a higher degree of MLP. Rather than waiting for a previous round of Vector Runahead to terminate, the processor reorders loads belonging to subsequent copies and issues them simultaneously. This allows multiple independent memory accesses to be performed in parallel. -> Figure 4 (c)

2.4 Managing pipeline resources

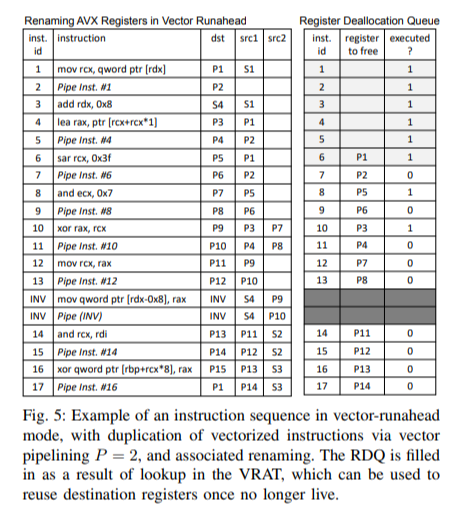

To process P copies of the vectorized instructions in a pipelined manner, where P is the pipeline depth, the processor must maintain a one-to-many mapping from an architectural register to its corresponding physical registers. This is handled by a new HW structure called the vector register allocation table (VRAT).

A physical register can be freed when a new instruction, writing to the same architectural register to which the physical register was mapped, commits. However, as the instruction doesn't commit in vector-runahead mode, a new HW structure called the register deallocation queue (RDQ) handles the deallocation(or freeing) of physical registers. An RDQ entry is assigned for each instruction, and the physical register corresponding to the destination register of the instruction is recorded in the entry because it will be freed when the instruction finishes execution.

2.5 Terminating runahead

Vector-runahead mode terminates when one of the following conditions is met: 1) encountering the same stride load again, 2) issuing the terminator, which is recorded in the Stride detector, or 3) all vector lanes are marked as invalid.

When vector-runahead mode terminates, the status of the entry into runahead mode is restored, and normal execution resumes.

'Paper Reading > CPU Microarchitecture' 카테고리의 다른 글

| [HPCA '03] O. Mutlu, Runahead Execution (0) | 2023.12.25 |

|---|---|

| [Review] M. Jalili, Reducing Load Latency with Cache Level Prediction, HPCA 2022 (0) | 2023.09.11 |

| [HPCA '22] M. Jalili, Reducing Load Latency with Cache Level Prediction (0) | 2023.09.07 |

| [ISCA '22] (2/2) S. Shukla, Register File Prefetching (0) | 2023.07.20 |

| [ISCA '22] (1/2) S. Shukla, Register File Prefetching (0) | 2023.07.17 |