1 Background

1.1 Virtual memory & Page table (PT)

Virtual memory designs allow any mapping from a virtual page to a physical page. The OS keeps a PT, a per-process data structure that records the process's virtual-to-physical mappings. The PT is organized as a 4-level radix tree, as shown in Figure 1, and the system accesses each level in sequence to find the physical page number (PPN) that corresponds to a virtual page number (VPN).
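The sequential, level-by-level lookup can be illustrated with a small sketch. It assumes an x86-64-style layout (4 KiB pages, 9 index bits per level), which these notes do not state, and models each tree node as a Python dict; the names `split_vpn`, `map_page`, and `walk` are hypothetical.

```python
# Assumed layout (not stated in the text): 4 KiB pages, 9 index bits per level.
PAGE_OFFSET_BITS = 12
INDEX_BITS = 9
LEVELS = 4


def split_vpn(vaddr):
    """Return ([root index, ..., leaf index], page offset) for a virtual address."""
    offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)
    vpn = vaddr >> PAGE_OFFSET_BITS
    mask = (1 << INDEX_BITS) - 1
    indices = [(vpn >> (level * INDEX_BITS)) & mask
               for level in reversed(range(LEVELS))]
    return indices, offset


def map_page(root, vaddr, ppn):
    """Install a VPN -> PPN mapping, creating intermediate nodes as needed."""
    indices, _ = split_vpn(vaddr)
    node = root
    for idx in indices[:-1]:
        node = node.setdefault(idx, {})
    node[indices[-1]] = ppn


def walk(root, vaddr):
    """Access each level in sequence; return the PPN, or None if unmapped."""
    indices, _ = split_vpn(vaddr)
    node = root
    for idx in indices:
        node = node.get(idx)
        if node is None:
            return None
    return node  # the last level stores the PPN


root = {}
map_page(root, 0x7F0012345000, 0x42)
ppn = walk(root, 0x7F0012345ABC)   # same page, different offset
missing = walk(root, 0x1000)       # never mapped
```

Each `node.get` in `walk` stands for one memory access, which is why a full walk costs up to four dependent accesses in this model.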

1.2 Memory management unit (MMU)

Address translation from VPN to PPN is performed by the MMU. Figure 2 shows three key components of the MMU: a 2-level TLB, a page table walker, and page walk caches (PWC). The L1 instruction TLB, L1 data TLB, and unified L2 TLB store recently accessed page table entries (PTE), so translations for those pages avoid the latency of a page table walk (PTW). The page table walker is a dedicated HW structure that performs the PTW, and the PWCs cache entries from each intermediate level of the PT.

2 Overview

2.1 Limitations of previous works

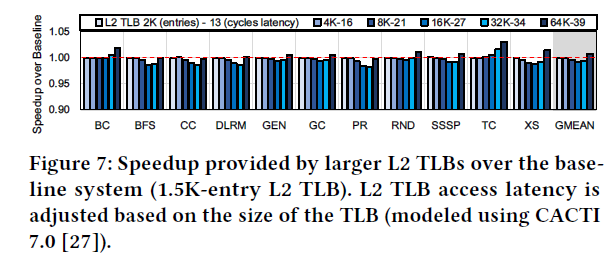

Increasing the size of the L2 TLB from 1.5k to 64k entries results in a 44% reduction in MPKI. However, it also increases the access latency, so the average speedup is only 1.08x, as shown in Figure 7.

Managing a large TLB in SW suffers from long latency when accessing TLB entries, because the processor must fetch them from main memory. In addition, it requires a contiguous physical address space.

2.2 Key idea

The paper suggests storing TLB entries in the existing cache hierarchy, particularly in the L2 cache. When the requested TLB entries reside in the L2 cache, the latency of page translation is significantly lower than that of a PTW. This does not pollute the existing L2 cache, because a large fraction of cache blocks are not reused after being brought into the L2 cache, as shown in Figure 11.

2.3 Design overview

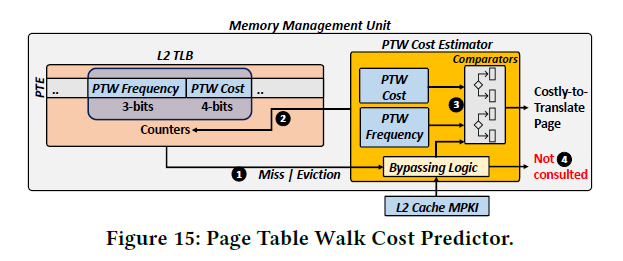

Victima introduces two main components. First, a PTW cost predictor (PTW-CP) predicts whether a page's translation is costly, and Victima stores only the PTEs of costly-to-translate pages in the L2 cache. Second, a TLB-aware cache replacement policy prioritizes important TLB entries over other cache data.

Figure 12 shows the overall address translation flow. On an L2 TLB miss, Victima transforms the data cache block that contains the requested PTE into a cluster of TLB entries for costly-to-translate pages. On an L2 TLB eviction, Victima issues a PTW in the background and then follows a process similar to the L2 TLB miss case. If the evicted TLB entry, predicted to belong to a costly-to-translate page, is accessed again soon, the corresponding PTE can be obtained from the L2 cache without performing a costly PTW.

3 Design Details

3.1 TLB block layout in the L2 cache

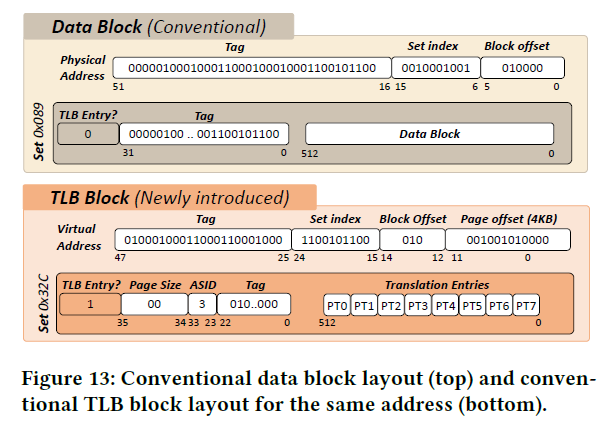

A conventional L2 cache block is accessed using a PA, while a TLB block is accessed using a VA. To cope with this discrepancy, Victima proposes a new cache block layout that enables storing TLB entries. First, an additional bit distinguishes a data block from a TLB block. Second, a TLB block requires only a 23-bit tag, whereas a data block requires a 36-bit tag. The remaining 13 bits are used to avoid aliasing (the ASID field in Figure 13) and to store page size information.
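The bit budget above (36 - 23 = 13 spare bits) can be checked with a short packing sketch. How the 13 bits are divided between the ASID and the page size is not given in these notes; the 11 + 2 split below, the field order, and the function names are assumptions for illustration only.

```python
DATA_TAG_BITS = 36                          # from the text
TLB_TAG_BITS = 23                           # from the text
SPARE_BITS = DATA_TAG_BITS - TLB_TAG_BITS   # 13 bits left over

# Assumed split of the spare bits (not stated in the text).
ASID_BITS = 11
PAGE_SIZE_BITS = 2
assert ASID_BITS + PAGE_SIZE_BITS == SPARE_BITS


def pack_tlb_tag(vtag, asid, page_size):
    """Pack a TLB-block tag field into the space of a 36-bit data tag."""
    if not 0 <= vtag < (1 << TLB_TAG_BITS):
        raise ValueError("virtual tag does not fit in 23 bits")
    if not 0 <= asid < (1 << ASID_BITS):
        raise ValueError("ASID does not fit in the assumed field")
    if not 0 <= page_size < (1 << PAGE_SIZE_BITS):
        raise ValueError("page size code does not fit in the assumed field")
    return (vtag << SPARE_BITS) | (asid << PAGE_SIZE_BITS) | page_size


def unpack_tlb_tag(field):
    """Inverse of pack_tlb_tag: return (vtag, asid, page_size)."""
    page_size = field & ((1 << PAGE_SIZE_BITS) - 1)
    asid = (field >> PAGE_SIZE_BITS) & ((1 << ASID_BITS) - 1)
    vtag = field >> SPARE_BITS
    return vtag, asid, page_size


packed = pack_tlb_tag(vtag=0x5A5A5, asid=0x123, page_size=1)
fields = unpack_tlb_tag(packed)
```

The extra bit that marks a block as a TLB block sits outside this 36-bit field and is not modeled here.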

Victima modifies the cache replacement policy to prioritize TLB blocks over data blocks. Upon insertion into the cache, the reuse distance of a TLB block is set to 0, which is smaller than that of a data block. When a replacement candidate is selected, a TLB block is given one more chance to avoid eviction if translation pressure is high. Upon a cache hit, the reuse distance of a TLB block is reduced by a larger amount than that of a data block, which lowers the likelihood that the TLB block is evicted.
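A minimal sketch of these three rules, written as an RRIP-style policy over one cache set. The counter width, the data-block insertion value, the hit decrements, and the way the second chance is granted are all assumptions chosen for illustration; the notes only fix the TLB insertion value of 0 and the relative ordering between TLB and data blocks.

```python
MAX_DISTANCE = 3          # assumed 2-bit reuse-distance counter
DATA_INSERT_DISTANCE = 2  # assumed insertion value for data blocks
DATA_HIT_STEP = 1         # assumed decrement on a data-block hit
TLB_HIT_STEP = 3          # assumed larger decrement on a TLB-block hit


class Block:
    def __init__(self, tag, is_tlb, distance=None):
        self.tag = tag
        self.is_tlb = is_tlb
        # Rule 1: TLB blocks are inserted with reuse distance 0.
        if distance is None:
            distance = 0 if is_tlb else DATA_INSERT_DISTANCE
        self.distance = distance


def on_hit(block):
    """Rule 3: a hit lowers a TLB block's distance more than a data block's."""
    step = TLB_HIT_STEP if block.is_tlb else DATA_HIT_STEP
    block.distance = max(0, block.distance - step)


def pick_victim(blocks, high_translation_pressure):
    """Return the index of the block to evict from one set."""
    spared = set()  # TLB blocks that already used their second chance
    while True:
        for i, block in enumerate(blocks):
            if block.distance < MAX_DISTANCE:
                continue
            # Rule 2: under high pressure, skip a TLB candidate once.
            if block.is_tlb and high_translation_pressure and i not in spared:
                spared.add(i)
                block.distance = MAX_DISTANCE - 1
                continue
            return i
        # No victim found: age every block and retry.
        for block in blocks:
            block.distance = min(MAX_DISTANCE, block.distance + 1)


cache_set = [Block(1, is_tlb=True, distance=3), Block(2, is_tlb=False, distance=3)]
victim_under_pressure = pick_victim(cache_set, high_translation_pressure=True)
```

Tracking spared blocks per selection guarantees termination even when every block in the set is a TLB block.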

3.2 Inserting TLB blocks into the L2 cache

When an L2 TLB miss occurs, the PTW-CP first predicts whether the requested page will be costly to translate in the future. If the prediction is positive, the MMU checks whether the corresponding TLB block already resides in the L2 cache. If not, the MMU waits until the PTW completes and then transforms the cache block from the data block layout into a TLB block by updating the tag, TLB bit, ASID, and page size information.
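The miss path described above can be sketched as a toy model. It assumes a 64-byte cache block holding eight 8-byte PTEs, represents cache blocks as dicts, and uses the cluster number `vpn // 8` as a stand-in for the real virtual tag; `predict_costly` and `page_table_walk` are hypothetical callbacks, not part of any real interface.

```python
PTES_PER_BLOCK = 8  # assumption: 64-byte cache block, 8-byte PTEs


def find_tlb_block(l2_cache, vpn, asid):
    """Return the TLB block covering vpn for this address space, if present."""
    cluster = vpn // PTES_PER_BLOCK
    for block in l2_cache:
        if block["tlb_bit"] and block["tag"] == cluster and block["asid"] == asid:
            return block
    return None


def on_l2_tlb_miss(l2_cache, vpn, asid, page_size, predict_costly, page_table_walk):
    """Serve the miss with a PTW, then optionally convert the PTE's data block."""
    # The PTW returns the data block that holds the requested PTE.
    block = page_table_walk(vpn)
    ppn = block["ptes"][vpn % PTES_PER_BLOCK]
    # Insert only for costly pages, and only if no TLB block exists yet.
    if predict_costly(vpn) and find_tlb_block(l2_cache, vpn, asid) is None:
        block.update(tlb_bit=1, tag=vpn // PTES_PER_BLOCK,
                     asid=asid, page_size=page_size)
    return ppn


data_block = {"tlb_bit": 0, "tag": 0xABC, "asid": None, "page_size": None,
              "ptes": list(range(100, 108))}
l2 = [data_block]
ppn = on_l2_tlb_miss(l2, vpn=42, asid=7, page_size="4K",
                     predict_costly=lambda vpn: True,
                     page_table_walk=lambda vpn: data_block)
```

After the call, the same block is found by a virtual lookup for any VPN in the cluster (40 to 47) under ASID 7, but not under a different ASID.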

When an L2 TLB eviction occurs, a similar process is executed. The difference is that the MMU triggers a PTW for a TLB block containing the TLB entry to be evicted from the L2 TLB.

3.3 Page table walk cost predictor (PTW-CP)

Victima uses two metrics to determine whether a page is costly-to-translate: PTW frequency and PTW cost. The features and the threshold for each feature were selected through repeated evaluations of a neural network. As a result, a neural network with 2 features and 6 hidden layers can be approximated by a circuit with several comparators, as shown in Figure 15.
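To make the comparator idea concrete, the sketch below reduces the predictor to two threshold checks. The threshold values and the way the two comparisons are combined (a logical AND) are placeholders invented for illustration; the actual values and logic are those in Figure 15, which these notes do not reproduce.

```python
# Hypothetical thresholds; the real values come from the paper's tuning.
FREQ_THRESHOLD = 2
COST_THRESHOLD = 3


def predict_costly(ptw_frequency, ptw_cost):
    """Comparator-style stand-in for PTW-CP: two threshold checks, no arithmetic.

    ptw_frequency: how often the page has triggered a PTW (counter value)
    ptw_cost:      how expensive those PTWs were (counter value)
    """
    return ptw_frequency >= FREQ_THRESHOLD and ptw_cost >= COST_THRESHOLD


rarely_walked = predict_costly(ptw_frequency=1, ptw_cost=7)
often_and_expensive = predict_costly(ptw_frequency=5, ptw_cost=6)
```

A predictor of this shape needs only a few comparators per lookup, which is the appeal of approximating the trained network rather than evaluating it in hardware.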